It’s a brave new world, with AI (artificial intelligence) finding its way into the lives of anyone who interacts with technology on a daily basis. If you have a smartphone in your pocket, you likely have some form of AI-enabled technology on it, maybe even several. From virtual assistants like Siri and Alexa to more recent tools like chatbots and image filters, AI has grown beyond novelty and is now a genuinely useful component of consumer devices.

Adobe, known for imaging apps like Photoshop and Illustrator, is no stranger to adding AI-based features to its image editing tools. Years before the current AI boom, Adobe was already developing tools that used a form of AI to quickly edit photos. Its “content aware” features let users select part of a photo, remove it, and have it replaced with a recreation of the background behind that object. The software interprets the surrounding background and fills the gap with more of the same, not unlike what the latest AI image editing tools do.

Where Adobe has made a leap in AI image editing, however, is with its recent Beta release of Photoshop, which includes a generative AI feature based on its Firefly suite of tools. The new feature has been met with a mix of excitement and skepticism: it is so capable at making complex edits to images that some believe it could replace human designers and image editors.

Before we look at what Adobe’s generative AI can do, let’s get a better understanding of what it is.

What is Generative AI?

Generative AI is any form of artificial intelligence that can create new content, including images, videos, audio, and text. It can create something in a blank document using only the prompt provided by the user, rather than relying solely on the existing content of that document. In photography and illustration, generative AI can start with a blank artboard and create an entirely new image. In Photoshop, however, the results are best when parts of an existing image are used, giving the AI a better starting point and reference material.

What Can Photoshop Generative AI Do?

1. Remove or replace an image background. The generative AI toolbar in the current beta release of Photoshop includes a text field and buttons to trigger the algorithm for the generative process, along with some selection tools. Using these tools (some of which were part of previous releases of Photoshop), the user can select the subject of the image and invert that selection to select just the background. With the background selected, the user can prompt the AI to change or edit that background. We tested this using the Barn of Brands here at Ridge Marketing and placed the barn in some new locations, including on a beach.

2. Re-render a subject in a new way. Using the same selection tools mentioned above, users can select the main subject of an image and use generative AI to re-render that subject as something different. Again using the Ridge barn as an example, we prompted Photoshop to recreate the barn as a log cabin.

3. Extend the background of an image. Possibly one of the most useful tools in the day-to-day workflow of many creative professionals is the ability to quickly extend the background scene of an image to create more usable space. One example would be website hero images, which often require significant horizontal space to fill a large area. Photos that were previously unusable in certain scenarios because of their limited horizontal dimensions can now be used, thanks to AI-generated backgrounds and content.

4. Remove people and objects from a photo. Photoshop has had a Content Aware Fill feature that can remove objects from an image for some time now, but it often leaves some visual artifacts behind and struggles with complex backgrounds. Generative AI is far more effective in removing an object or person from an image and then generating the space that would exist behind that object without the visual artifacts or other distortions that look artificial.

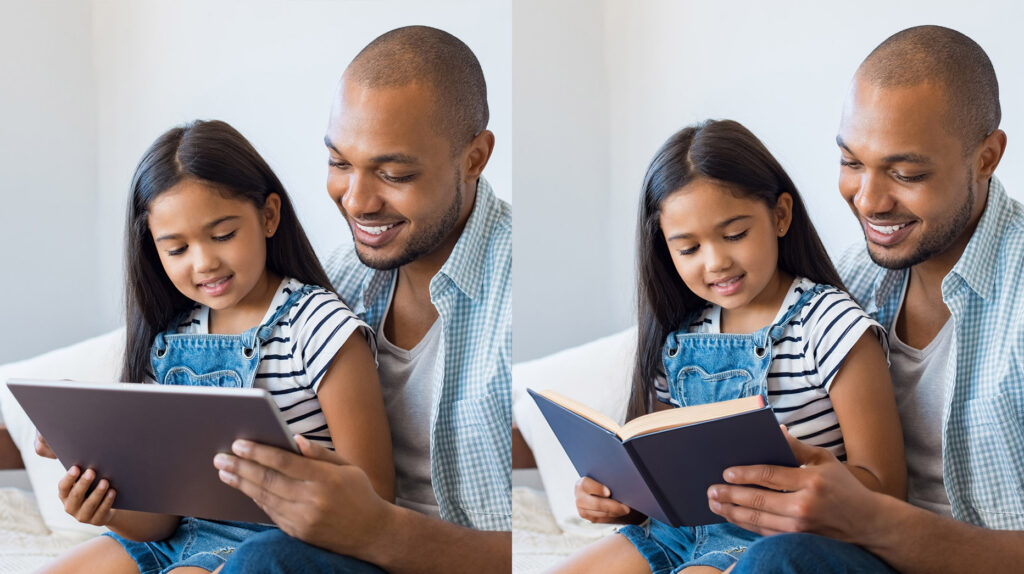

5. Change details within an image. While tasks like changing an article of clothing or replacing an object in a photo with something else are possible in the hands of a skilled Photoshop user, generative AI makes these tasks much faster and easier to accomplish. After you use the selection tool to identify an object in an image that you want to replace, such as the tablet below, generative AI can replace it with your text-prompted suggestion.
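For the background extension described in item 3, it can help to work out ahead of time how much canvas needs to be added before asking the AI to fill it. The short Python sketch below is only an illustration of that arithmetic and is not part of Photoshop or Firefly. The function name `hero_padding` and the 3:1 target ratio in the example are our own assumptions, chosen to show how a photo could be widened evenly on both sides to suit a wide hero area.

```python
import math


def hero_padding(width: int, height: int, target_ratio: float) -> tuple[int, int]:
    """Return (left, right) pixels to add so width / height reaches target_ratio.

    Hypothetical helper for planning a canvas extension; the height is left
    unchanged and any extra width is split as evenly as possible.
    """
    if width <= 0 or height <= 0 or target_ratio <= 0:
        raise ValueError("width, height, and target_ratio must be positive")

    # Width the canvas needs in order to hit the target ratio at this height.
    target_width = math.ceil(height * target_ratio)

    # If the photo is already wide enough, nothing needs to be added.
    extra = max(0, target_width - width)

    left = extra // 2
    right = extra - left
    return left, right


# Example: a 1200 x 800 photo widened to a 3:1 hero banner.
print(hero_padding(1200, 800, 3.0))  # (600, 600)
```

In this example, the canvas would be extended by 600 pixels on each side, and those two empty strips are the areas a generative fill would then be asked to complete.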

What Photoshop Generative AI Can’t Do

1. AI Struggles With Editing People and Animals

As powerful as Adobe’s new generative AI tool is, it struggles in one key area: generating images of humans and animals, and editing human features. The algorithm is well-versed in building things into images that weren’t there before when the space being filled requires inorganic objects or backgrounds. Anything solid, from wood and cement to fabric, foliage, and land masses, can easily be added to an image by the generative AI tool.

Adding a person into an image, however, seems to be complicated enough to trip up the AI. Human images end up looking less than human, often with impossible body shapes and proportions or extra appendages.

The same applies to animals. You can prompt the AI to generate an animal and often get what you asked for, but our tests for things like squirrels and sheep resulted in some three-eyed squirrels and a two-headed smiling sheep.

2. AI Can’t Replace Graphic Designers

Adobe’s AI tools also won’t do one other thing that some critics forecast they might: replace human designers and image editors. What is so impressive about these tools is that they can speed up some of the more tedious processes of image editing, but they can’t do that without human intervention, and the results often still require some manual editing to finish.

The tools within Photoshop also work best when generative edits are made incrementally, starting with broad strokes (replacing a background or extending the dimensions of the scene) and then working on the smaller details to fine-tune the content. This process still depends on the human eye to refine an image for use in a layout.

The end result is still just a photo. It may be an impressive new photo that looks dramatically different, produced in a fraction of the time that similar editing would have taken without the help of AI. But the usefulness of a photo in marketing depends on how that photo is used, whether it is featured in a web design, an ad layout, or an email campaign.

Is AI a Threat to Designers?

When Photoshop first started gaining in popularity, some critics lamented that Photoshop would take jobs away from photographers and photo processors, since digital editing tools were more accessible and could help anyone make mediocre photos look more professional, forgoing the need to hire a skilled expert.

We now know that professional photographers are still in high demand. Although the business of photography and post-processing has certainly changed because of tools like Photoshop, those tools did not take jobs away from anyone willing to adapt to the new digital workspace.

Every tool used today in design has made it easier to create increasingly better images, but the photography process still starts with the skills and capabilities of a photographer and ends with designers using these tools effectively to build a compelling image and use that image in marketing materials.

Helpful Resources

Adobe Firefly – More information from Adobe about their Firefly suite of generative AI tools.

Adobe Unveils Future of Creative Cloud With Generative AI as a Creative Co-Pilot in Photoshop